Power Consumption & Temperatures

The move to the 55nm process paid off for Nvidia, and as you can see, the GeForce 9800 GTX+ is more efficient than the Radeon HD 4850, using 7% less power.

The Radeon HD 4830, on the other hand, used much less power than the GeForce 9800 GT at idle, while using only slightly more under load. The Radeon HD 4670 was very energy efficient, as was the Radeon HD 4650, which used the least power of all the cards tested.

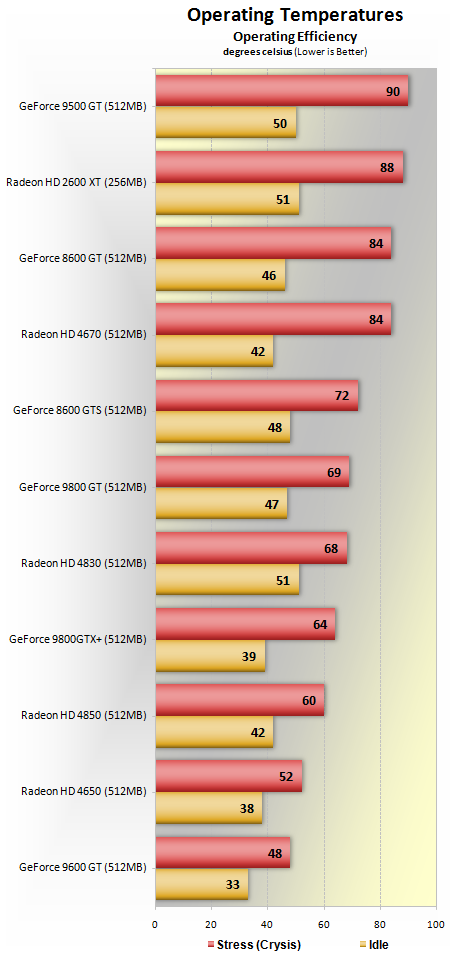

Please keep in mind that almost none of these cards use the reference cooler, so their temperatures are going to be better (lower) than those of cards that do. The exact cards used are listed on the Test System Specs page.

Amazingly, the GeForce 9500 GT was the hottest graphics card tested under load, followed by the old Radeon HD 2600XT. While the GeForce 9500 GT reached 90 degrees, the Radeon HD 2600XT peaked at 88 degrees. The GeForce 8600 GT and Radeon HD 4670 were slightly cooler, both with a load temperature of 84 degrees. Temperatures then dropped off considerably, with the GeForce 8600 GTS next in line at 72 degrees under load.

Now, the GeForce 8600 GTS should theoretically run hotter than the 8600 GT, but because the GTS we tested was fitted with a better cooler, this was not the case.

The GeForce 9800 GT reached 69 degrees, while the new Radeon HD 4830 ran at 68 degrees. The GeForce 9800 GTX+ was impressive with a load temperature of just 64 degrees, but the Radeon HD 4850 was slightly better at 60 degrees.

Once again, we included this test for general reference only. Because heatsinks and coolers vary so much between manufacturers, these numbers will not apply to every card on sale with the corresponding GPU. In that sense, the power consumption test above should prove more useful.